Is Your Workforce Ready for Generative AI Adversaries?

Defending Against the Deep

By Tucker Mahan, Director of Emerging Technology, with Barbara Brutt


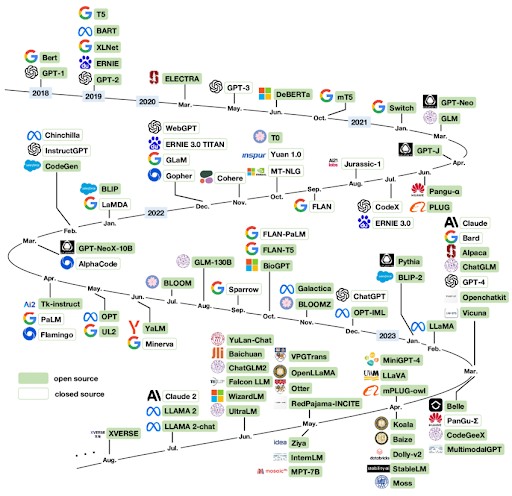

AI is everywhere, and even the quickest history lesson on computers shows that artificial intelligence has been in the works since Alan Turing’s 1950 paper “Computing Machinery and Intelligence” laid its conceptual foundations. OpenAI’s first large language model (LLM), GPT-1, came out in 2018, the same year as Google’s BERT. In the span of a single human lifetime, roughly seventy years, AI has taken off.

Claims about AI have multiplied just as quickly. For example, a commonly repeated statistic says that “90% of the internet will be AI by 2025 or 2026!” Dig into the source material, and it becomes apparent that the figure comes from a since-redacted quote in a Europol report. Yet the claim persists because many news organizations repeated it, and those articles in turn fed into what AI knows. Search for it online today, and AI will offer a vague summary: “Some experts predict that as much as 90 percent of online content could be synthetically generated within a few years.”

The ability to analyze information has only become more critical. In “Why AI fuels cybersecurity anxiety, particularly for younger employees,” Ernst & Young (EY) reports that its 2024 EY Human Risk in Cybersecurity Survey found the following:

- 39% of workers are not confident that they know how to use AI responsibly

- 91% of employees say organizations should regularly update their training to keep pace with AI

- 85% of workers believe AI has made cybersecurity attacks more sophisticated

The Threat of Generative AI Adversaries

Google warns that social engineering and phishing attacks are growing in both effectiveness and scale, and that they will continue to grow. Generative AI adversaries are already here, so it’s important to learn from the attacks we already know about and to discover how to educate your workforce.

What happens when one of your employees cannot spot a deepfake scam? Arup, a British multinational design and engineering company, can tell you: one of its Hong Kong employees paid out $25 million to fraudsters.

“Unfortunately, we can’t go into details at this stage as the incident is still the subject of an ongoing investigation. However, we can confirm that fake voices and images were used,” an Arup spokesperson said in an emailed statement. The worker had initially suspected phishing but set those suspicions aside after a video call in which they recognized colleagues by face and voice. The video call reportedly included several deepfake streams of company leaders, used to manipulate the employee.

What happens when a threat actor uses social engineering to access important company information? In “Casino giant MGM expects $100 million hit from hack that led to data breach,” Reuters reports how a hack disrupted the company’s internal systems. Okta, the identity platform MGM used, observed that the threat actor demonstrated novel methods of lateral movement and defense evasion: the actor used deepfake audio to call the support desk and have admin-level credentials reset.

While generative AI adversaries may become more advanced, many of these attacks can be prevented, and defenders have a number of detection opportunities to consider. How can companies prepare for AI-generated media?

Real or AI?

Whether you’re reading a news article online or scrolling social media, you have likely seen an AI-generated image at some point. Many people use ChatGPT for help with writing, and AI-generated images and videos are increasingly common. A few years ago, it was simple to tell an AI-generated paragraph from a human-written one, but times are changing.

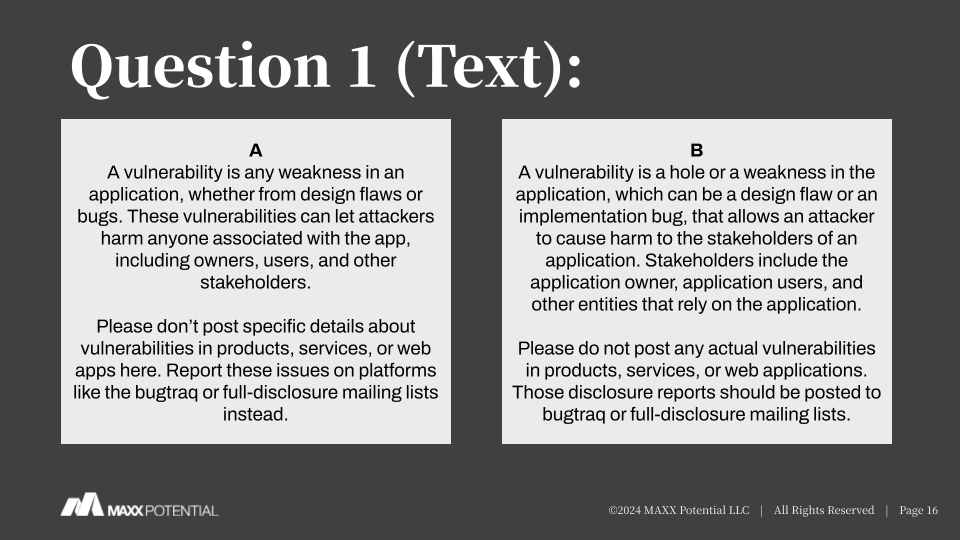

Can you spot the difference between AI-generated text and human-written text?

Telling human-made content from AI-generated content is becoming harder, especially when the person writing the prompt has carefully developed their prompting skills. A skillful prompt can generate text that is almost impossible to identify as AI-generated.

Box A was AI-generated, and in our testing, only 25% of respondents correctly identified it as AI. While this example seems harmless, it points to a larger problem when analyzing emails, text messages, or news stories. Even AI detectors are proving unreliable here, because they produce both false positives (flagging human writing as AI) and false negatives (missing AI-generated text).

The most helpful techniques remain critical thinking and search skills. A quick Internet search may reveal that other users are reporting a similar suspicious email or text, which can help you decide whether to disregard it. Critical thinking means asking questions, analyzing different aspects of a message, and not jumping to conclusions.

A number of online quizzes let individuals test their ability to recognize AI-generated media, whether it’s video, voice, images, or text. Interested in testing your ability to recognize AI in media? Take this Tidio quiz or try Which Face Is Real.

Unsurprisingly, individuals with more access to and experience with AI-generated content are often better at telling AI content from human content.

Understanding the Limitations of Artificial Intelligence & Empowering Your Workforce

AI is everywhere, yet it still has limitations. Anyone who uses ChatGPT or another AI tool regularly knows that great output requires a great prompt. Beyond that, common limitations of AI include content length, input quality, data privacy, and resource intensiveness. These limitations are worth knowing, because they are often where AI-generated content gives itself away.

AI can do a lot, but when it comes to creating a long piece of content, whether text, audio, or video, it has a case of the hiccups. No matter the medium, longer content tends to expose problems: the AI may repeat itself, lose the flow, shift accents or speaking speed, introduce strange distortions, or produce physical motions that don’t match. Each medium has its own characteristic flaws that a human needs to monitor and correct.

Input quality is another limitation, and it is closely tied to content length. A 10-second clip of someone saying “Hello? Hello? Anyone there? Hello?” can be used to train an AI voice; however, that clip doesn’t capture the range of accent and tone that a longer conversation would. The resulting audio is likely to sound stilted and fake.

Some newer AI releases advertise their ability to respond in real time, and while some do this decently, many still leave us waiting. Humans typically want the information they’re seeking as quickly as possible, so a noticeable lag in a live conversation can be a warning sign. Then there is resource intensiveness: while lower-cost, effective models are coming out, their environmental impact remains unknown.

AI often seems like the answer to every problem, and it’s also easy to get caught up in the idea that artificial intelligence will replace every job. Despite its impressive capabilities, the real strength in using AI lies in the user’s judgment. It’s the human ability to discern when and how to use AI that determines its effectiveness. By leveraging AI as a tool rather than a solution in itself, users can maximize its potential while maintaining the critical thinking and adaptability that only humans can provide.

Building AI Awareness

As shared earlier, individuals who interact with artificial intelligence frequently are more likely to recognize AI-generated content than those who don’t. We tested this internally and found a moderate positive relationship between familiarity with generative AI and scores on our AI detection quiz. The correlation is not strong, which suggests that familiarity helps but is not the sole factor. Consider finding ways for employees to build familiarity with artificial intelligence. Working with AI can even be gamified so that workers enjoy the process of understanding it.

Continuous Learning & Adaptation

Again and again, you’ll hear that people will not be replaced by AI; they will be replaced by people who use AI. Lean into learning about AI and finding ways to bring it into your role. As Gino Wickman says, “Systemize the predictable so you can humanize the exceptional.”

Balance Demos with Hands-On Experience

Watching videos and live presentations has its place in educating people about artificial intelligence, but each person will learn much more by diving into the content on their own. The best thing to offer workers is hands-on experience with AI, alongside an understanding of what responsible use looks like. Empower your employees to stay up to date and prepared for AI-generated adversaries.

The arrival of artificial intelligence in the tech industry and the wider world has already altered much of the internet, and the changes will keep coming. Preparing your workforce for AI-generated adversaries starts with working with artificial intelligence and learning what it’s capable of across all types of media. AI will continue to evolve, and so must we.

At MAXX Potential, our team is committed to staying at the forefront of technology, artificial intelligence, and automation. If you are interested in talking to us about a project your team is working on, please reach out at MAXXpotential.com/contact.
